Code

library(tidymodels)

library(themis)

library(tidyverse)

library(skimr)

library(GGally)

library(readr)

library(vip) Tony Duan

January 29, 2023

[1] 50000 23Rows: 50,000

Columns: 23

$ hotel <fct> City_Hotel, City_Hotel, Resort_Hotel, R…

$ lead_time <dbl> 217, 2, 95, 143, 136, 67, 47, 56, 80, 6…

$ stays_in_weekend_nights <dbl> 1, 0, 2, 2, 1, 2, 0, 0, 0, 2, 1, 0, 1, …

$ stays_in_week_nights <dbl> 3, 1, 5, 6, 4, 2, 2, 3, 4, 2, 2, 1, 2, …

$ adults <dbl> 2, 2, 2, 2, 2, 2, 2, 0, 2, 2, 2, 1, 2, …

$ children <fct> none, none, none, none, none, none, chi…

$ meal <fct> BB, BB, BB, HB, HB, SC, BB, BB, BB, BB,…

$ country <fct> DEU, PRT, GBR, ROU, PRT, GBR, ESP, ESP,…

$ market_segment <fct> Offline_TA/TO, Direct, Online_TA, Onlin…

$ distribution_channel <fct> TA/TO, Direct, TA/TO, TA/TO, Direct, TA…

$ is_repeated_guest <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ previous_cancellations <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ previous_bookings_not_canceled <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ reserved_room_type <fct> A, D, A, A, F, A, C, B, D, A, A, D, A, …

$ assigned_room_type <fct> A, K, A, A, F, A, C, A, D, A, D, D, A, …

$ booking_changes <dbl> 0, 0, 2, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ deposit_type <fct> No_Deposit, No_Deposit, No_Deposit, No_…

$ days_in_waiting_list <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ customer_type <fct> Transient-Party, Transient, Transient, …

$ average_daily_rate <dbl> 80.75, 170.00, 8.00, 81.00, 157.60, 49.…

$ required_car_parking_spaces <fct> none, none, none, none, none, none, non…

$ total_of_special_requests <dbl> 1, 3, 2, 1, 4, 1, 1, 1, 1, 1, 0, 1, 0, …

$ arrival_date <date> 2016-09-01, 2017-08-25, 2016-11-19, 20…unbalance data

# A tibble: 2 × 3

children n prop

<fct> <int> <dbl>

1 children 4038 0.0808

2 none 45962 0.919 | Name | hotels |

| Number of rows | 50000 |

| Number of columns | 23 |

| _______________________ | |

| Column type frequency: | |

| Date | 1 |

| factor | 11 |

| numeric | 11 |

| ________________________ | |

| Group variables | None |

Variable type: Date

| skim_variable | n_missing | complete_rate | min | max | median | n_unique |

|---|---|---|---|---|---|---|

| arrival_date | 0 | 1 | 2015-07-01 | 2017-08-31 | 2016-08-29 | 793 |

Variable type: factor

| skim_variable | n_missing | complete_rate | ordered | n_unique | top_counts |

|---|---|---|---|---|---|

| hotel | 0 | 1 | FALSE | 2 | Cit: 30752, Res: 19248 |

| children | 0 | 1 | FALSE | 2 | non: 45962, chi: 4038 |

| meal | 0 | 1 | FALSE | 5 | BB: 38316, HB: 6399, SC: 4494, Und: 580 |

| country | 0 | 1 | FALSE | 155 | PRT: 14046, GBR: 6405, FRA: 5627, ESP: 4298 |

| market_segment | 0 | 1 | FALSE | 7 | Onl: 23760, Off: 10604, Dir: 7131, Gro: 5124 |

| distribution_channel | 0 | 1 | FALSE | 5 | TA/: 38349, Dir: 8083, Cor: 3459, GDS: 108 |

| reserved_room_type | 0 | 1 | FALSE | 9 | A: 34889, D: 8675, E: 3096, F: 1299 |

| assigned_room_type | 0 | 1 | FALSE | 10 | A: 27357, D: 12577, E: 3924, F: 1839 |

| deposit_type | 0 | 1 | FALSE | 3 | No_: 49839, Ref: 92, Non: 69 |

| customer_type | 0 | 1 | FALSE | 4 | Tra: 35343, Tra: 12430, Con: 1864, Gro: 363 |

| required_car_parking_spaces | 0 | 1 | FALSE | 2 | non: 45019, par: 4981 |

Variable type: numeric

| skim_variable | n_missing | complete_rate | mean | sd | p0 | p25 | p50 | p75 | p100 | hist |

|---|---|---|---|---|---|---|---|---|---|---|

| lead_time | 0 | 1 | 80.09 | 91.20 | 0.00 | 8.0 | 45.0 | 125 | 709 | ▇▂▁▁▁ |

| stays_in_weekend_nights | 0 | 1 | 0.93 | 1.00 | 0.00 | 0.0 | 1.0 | 2 | 19 | ▇▁▁▁▁ |

| stays_in_week_nights | 0 | 1 | 2.46 | 1.94 | 0.00 | 1.0 | 2.0 | 3 | 50 | ▇▁▁▁▁ |

| adults | 0 | 1 | 1.83 | 0.51 | 0.00 | 2.0 | 2.0 | 2 | 4 | ▁▂▇▁▁ |

| is_repeated_guest | 0 | 1 | 0.04 | 0.20 | 0.00 | 0.0 | 0.0 | 0 | 1 | ▇▁▁▁▁ |

| previous_cancellations | 0 | 1 | 0.02 | 0.29 | 0.00 | 0.0 | 0.0 | 0 | 13 | ▇▁▁▁▁ |

| previous_bookings_not_canceled | 0 | 1 | 0.20 | 1.80 | 0.00 | 0.0 | 0.0 | 0 | 72 | ▇▁▁▁▁ |

| booking_changes | 0 | 1 | 0.29 | 0.74 | 0.00 | 0.0 | 0.0 | 0 | 21 | ▇▁▁▁▁ |

| days_in_waiting_list | 0 | 1 | 1.57 | 14.79 | 0.00 | 0.0 | 0.0 | 0 | 379 | ▇▁▁▁▁ |

| average_daily_rate | 0 | 1 | 99.94 | 49.04 | -6.38 | 67.5 | 92.5 | 125 | 510 | ▇▆▁▁▁ |

| total_of_special_requests | 0 | 1 | 0.71 | 0.83 | 0.00 | 0.0 | 1.0 | 1 | 5 | ▇▁▁▁▁ |

# A tibble: 2 × 3

children n prop

<fct> <int> <dbl>

1 children 3027 0.0807

2 none 34473 0.919 # A tibble: 2 × 3

children n prop

<fct> <int> <dbl>

1 children 1011 0.0809

2 none 11489 0.919 recipe

holidays <- c("AllSouls", "AshWednesday", "ChristmasEve", "Easter",

"ChristmasDay", "GoodFriday", "NewYearsDay", "PalmSunday")

lr_recipe <-

recipe(children ~ ., data = hotel_other) %>%

step_date(arrival_date) %>%

step_holiday(arrival_date, holidays = holidays) %>%

step_rm(arrival_date) %>%

step_dummy(all_nominal_predictors()) %>%

step_zv(all_predictors()) %>%

step_normalize(all_predictors())model

workflow

CREATE THE GRID FOR TUNING

# A tibble: 5 × 1

penalty

<dbl>

1 0.0001

2 0.000127

3 0.000161

4 0.000204

5 0.000259# A tibble: 5 × 1

penalty

<dbl>

1 0.0386

2 0.0489

3 0.0621

4 0.0788

5 0.1

# A tibble: 15 × 7

penalty .metric .estimator mean n std_err .config

<dbl> <chr> <chr> <dbl> <int> <dbl> <chr>

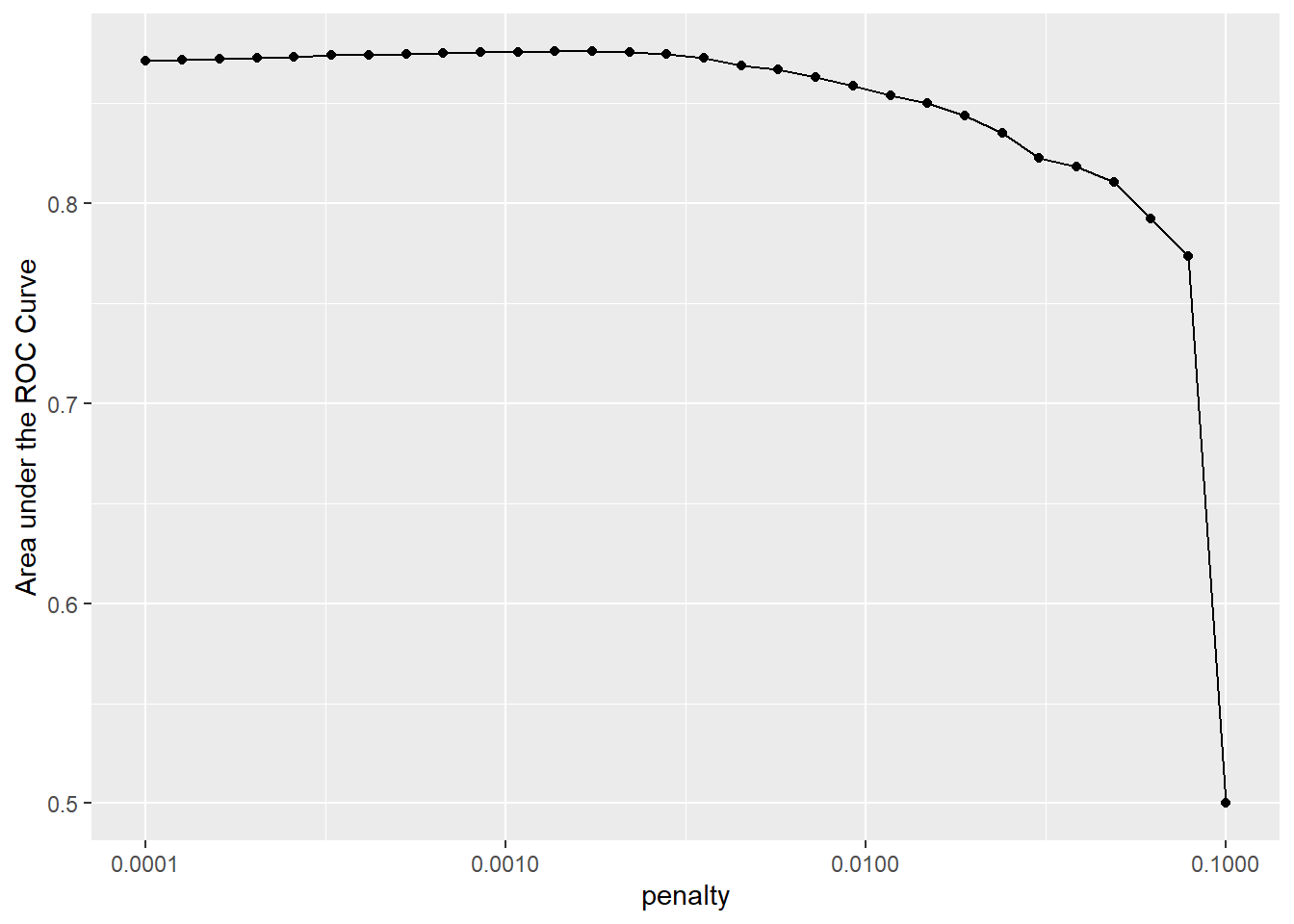

1 0.000127 roc_auc binary 0.872 1 NA Preprocessor1_Model02

2 0.000161 roc_auc binary 0.872 1 NA Preprocessor1_Model03

3 0.000204 roc_auc binary 0.873 1 NA Preprocessor1_Model04

4 0.000259 roc_auc binary 0.873 1 NA Preprocessor1_Model05

5 0.000329 roc_auc binary 0.874 1 NA Preprocessor1_Model06

6 0.000418 roc_auc binary 0.874 1 NA Preprocessor1_Model07

7 0.000530 roc_auc binary 0.875 1 NA Preprocessor1_Model08

8 0.000672 roc_auc binary 0.875 1 NA Preprocessor1_Model09

9 0.000853 roc_auc binary 0.876 1 NA Preprocessor1_Model10

10 0.00108 roc_auc binary 0.876 1 NA Preprocessor1_Model11

11 0.00137 roc_auc binary 0.876 1 NA Preprocessor1_Model12

12 0.00174 roc_auc binary 0.876 1 NA Preprocessor1_Model13

13 0.00221 roc_auc binary 0.876 1 NA Preprocessor1_Model14

14 0.00281 roc_auc binary 0.875 1 NA Preprocessor1_Model15

15 0.00356 roc_auc binary 0.873 1 NA Preprocessor1_Model16# A tibble: 1 × 7

penalty .metric .estimator mean n std_err .config

<dbl> <chr> <chr> <dbl> <int> <dbl> <chr>

1 0.00137 roc_auc binary 0.876 1 NA Preprocessor1_Model12

Random Forest Model Specification (classification)

Main Arguments:

mtry = tune()

trees = 1000

min_n = tune()

Engine-Specific Arguments:

num.threads = cores

Computational engine: ranger Collection of 2 parameters for tuning

identifier type object

mtry mtry nparam[?]

min_n min_n nparam[+]

Model parameters needing finalization:

# Randomly Selected Predictors ('mtry')

See `?dials::finalize` or `?dials::update.parameters` for more information.# A tibble: 3 × 8

mtry min_n .metric .estimator mean n std_err .config

<int> <int> <chr> <chr> <dbl> <int> <dbl> <chr>

1 18 29 roc_auc binary 0.922 1 NA Preprocessor1_Model1

2 24 8 roc_auc binary 0.921 1 NA Preprocessor1_Model3

3 1 18 roc_auc binary 0.871 1 NA Preprocessor1_Model2# A tibble: 1 × 3

mtry min_n .config

<int> <int> <chr>

1 18 29 Preprocessor1_Model1# A tibble: 22,500 × 8

id .pred_children .pred_none .row mtry min_n children .config

<chr> <dbl> <dbl> <int> <int> <int> <fct> <chr>

1 validation 0.187 0.813 13 18 29 none Preprocessor…

2 validation 0.0381 0.962 20 18 29 none Preprocessor…

3 validation 0.470 0.530 22 18 29 children Preprocessor…

4 validation 0.00824 0.992 23 18 29 none Preprocessor…

5 validation 0.00592 0.994 31 18 29 none Preprocessor…

6 validation 0.000445 1.00 38 18 29 none Preprocessor…

7 validation 0 1 39 18 29 none Preprocessor…

8 validation 0.00676 0.993 50 18 29 none Preprocessor…

9 validation 0.0346 0.965 54 18 29 none Preprocessor…

10 validation 0.0340 0.966 57 18 29 children Preprocessor…

# … with 22,490 more rowslast_rf_mod <-

rand_forest(mtry = 8, min_n = 7, trees = 1000) %>%

set_engine("ranger", num.threads = cores, importance = "impurity") %>%

set_mode("classification")

# the last workflow

last_rf_workflow <-

rf_workflow %>%

update_model(last_rf_mod)

# the last fit

set.seed(345)

last_rf_fit <-

last_rf_workflow %>%

last_fit(splits)

last_rf_fit# Resampling results

# Manual resampling

# A tibble: 1 × 6

splits id .metrics .notes .predict…¹ .workflow

<list> <chr> <list> <list> <list> <list>

1 <split [37500/12500]> train/test split <tibble> <tibble> <tibble> <workflow>

# … with abbreviated variable name ¹.predictions# A tibble: 2 × 4

.metric .estimator .estimate .config

<chr> <chr> <dbl> <chr>

1 accuracy binary 0.946 Preprocessor1_Model1

2 roc_auc binary 0.924 Preprocessor1_Model1https://www.youtube.com/watch?v=dbXDkEEuvCU

https://juliasilge.com/blog/hotels-recipes/

---

title: "[tidymodel]Modeling hotel bookings in R using tidymodels and recipes"

author: "Tony Duan"

date: "2023-01-29"

categories: [R]

execute:

warning: false

error: false

format:

html:

toc: true

code-fold: show

code-tools: true

---

{width="508"}

```{r}

library(tidymodels)

library(themis)

library(tidyverse)

library(skimr)

library(GGally)

library(readr)

library(vip)

library(glmnet)

library(ranger)

```

# 1. download data

```{r}

hotels <-

read_csv('https://tidymodels.org/start/case-study/hotels.csv') %>%

mutate(across(where(is.character), as.factor))

dim(hotels)

```

```{r}

glimpse(hotels)

```

# 2. plot

unbalance data

```{r}

hotels %>%

count(children) %>%

mutate(prop = n/sum(n))

```

```{r}

skim(hotels)

```

# 3. data preparation

```{r}

set.seed(123)

splits <- initial_split(hotels, strata = children)

hotel_other <- training(splits)

hotel_test <- testing(splits)

```

```{r}

hotel_other %>%

count(children) %>%

mutate(prop = n/sum(n))

```

```{r}

hotel_test %>%

count(children) %>%

mutate(prop = n/sum(n))

```

```{r}

set.seed(234)

val_set <- validation_split(hotel_other,

strata = children,

prop = 0.80)

val_set

```

# build model

recipe

```{r}

holidays <- c("AllSouls", "AshWednesday", "ChristmasEve", "Easter",

"ChristmasDay", "GoodFriday", "NewYearsDay", "PalmSunday")

lr_recipe <-

recipe(children ~ ., data = hotel_other) %>%

step_date(arrival_date) %>%

step_holiday(arrival_date, holidays = holidays) %>%

step_rm(arrival_date) %>%

step_dummy(all_nominal_predictors()) %>%

step_zv(all_predictors()) %>%

step_normalize(all_predictors())

```

model

```{r}

lr_mod <-

logistic_reg(penalty = tune(), mixture = 1) %>%

set_engine("glmnet")

```

workflow

```{r}

lr_workflow <-

workflow() %>%

add_model(lr_mod) %>%

add_recipe(lr_recipe)

```

CREATE THE GRID FOR TUNING

```{r}

lr_reg_grid <- tibble(penalty = 10^seq(-4, -1, length.out = 30))

lr_reg_grid %>% top_n(-5) # lowest penalty values

lr_reg_grid %>% top_n(5) # highest penalty values

```

# TRAIN AND TUNE THE MODEL

```{r}

lr_res <-

lr_workflow %>%

tune_grid(val_set,

grid = lr_reg_grid,

control = control_grid(save_pred = TRUE),

metrics = metric_set(roc_auc))

```

```{r}

lr_plot <-

lr_res %>%

collect_metrics() %>%

ggplot(aes(x = penalty, y = mean)) +

geom_point() +

geom_line() +

ylab("Area under the ROC Curve") +

scale_x_log10(labels = scales::label_number())

lr_plot

```

```{r}

top_models <-

lr_res %>%

show_best("roc_auc", n = 15) %>%

arrange(penalty)

top_models

```

```{r}

lr_best <-

lr_res %>%

collect_metrics() %>%

arrange(penalty) %>%

slice(12)

lr_best

```

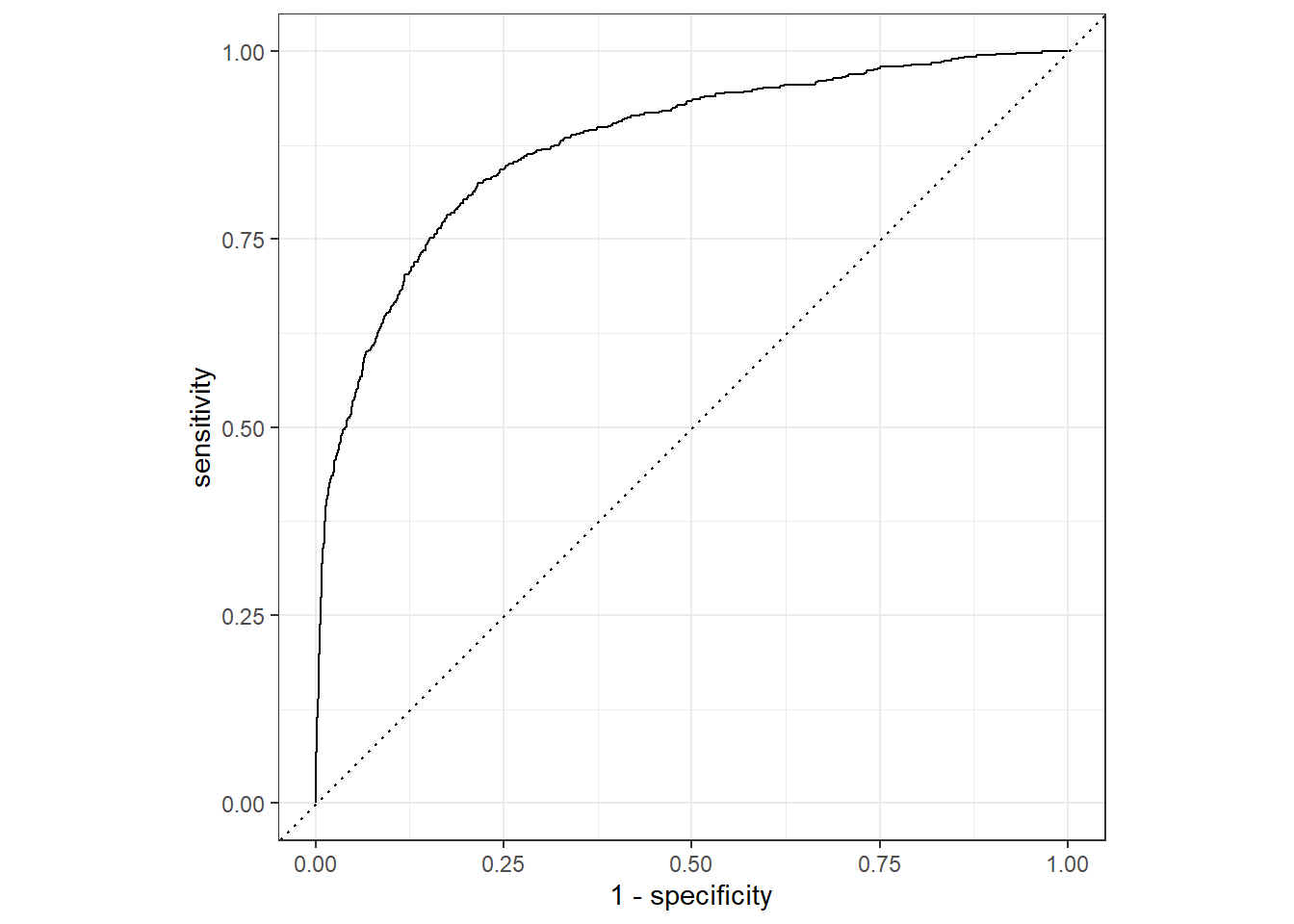

```{r}

lr_auc <-

lr_res %>%

collect_predictions(parameters = lr_best) %>%

roc_curve(children, .pred_children) %>%

mutate(model = "Logistic Regression")

autoplot(lr_auc)

```

## A SECOND MODEL: TREE-BASED ENSEMBLE

```{r}

cores <- parallel::detectCores()

cores

```

```{r}

rf_mod <-

rand_forest(mtry = tune(), min_n = tune(), trees = 1000) %>%

set_engine("ranger", num.threads = cores) %>%

set_mode("classification")

```

```{r}

rf_recipe <-

recipe(children ~ ., data = hotel_other) %>%

step_date(arrival_date) %>%

step_holiday(arrival_date) %>%

step_rm(arrival_date)

```

```{r}

rf_workflow <-

workflow() %>%

add_model(rf_mod) %>%

add_recipe(rf_recipe)

```

```{r}

rf_mod

```

```{r}

extract_parameter_set_dials(rf_mod)

```

```{r}

set.seed(345)

rf_res <-

rf_workflow %>%

tune_grid(val_set,

grid = 3,

control = control_grid(save_pred = TRUE),

metrics = metric_set(roc_auc))

#> i Creating pre-processing data to finalize unknown parameter: mtry

```

```{r}

rf_res %>%

show_best(metric = "roc_auc")

```

```{r}

autoplot(rf_res)

```

```{r}

rf_best <-

rf_res %>%

select_best(metric = "roc_auc")

rf_best

```

```{r}

rf_res %>%

collect_predictions()

```

```{r}

rf_auc <-

rf_res %>%

collect_predictions(parameters = rf_best) %>%

roc_curve(children, .pred_children) %>%

mutate(model = "Random Forest")

```

```{r}

bind_rows(rf_auc, lr_auc) %>%

ggplot(aes(x = 1 - specificity, y = sensitivity, col = model)) +

geom_path(linewidth = 1.5, alpha = 0.8) +

geom_abline(lty = 3) +

coord_equal() +

scale_color_viridis_d(option = "plasma", end = .6)

```

# last fit

```{r}

last_rf_mod <-

rand_forest(mtry = 8, min_n = 7, trees = 1000) %>%

set_engine("ranger", num.threads = cores, importance = "impurity") %>%

set_mode("classification")

# the last workflow

last_rf_workflow <-

rf_workflow %>%

update_model(last_rf_mod)

# the last fit

set.seed(345)

last_rf_fit <-

last_rf_workflow %>%

last_fit(splits)

last_rf_fit

```

```{r}

last_rf_fit %>%

collect_metrics()

```

```{r}

last_rf_fit %>%

extract_fit_parsnip() %>%

vip(num_features = 20)

```

```{r}

last_rf_fit %>%

collect_predictions() %>%

roc_curve(children, .pred_children) %>%

autoplot()

```

# Reference

https://www.youtube.com/watch?v=dbXDkEEuvCU

https://juliasilge.com/blog/hotels-recipes/